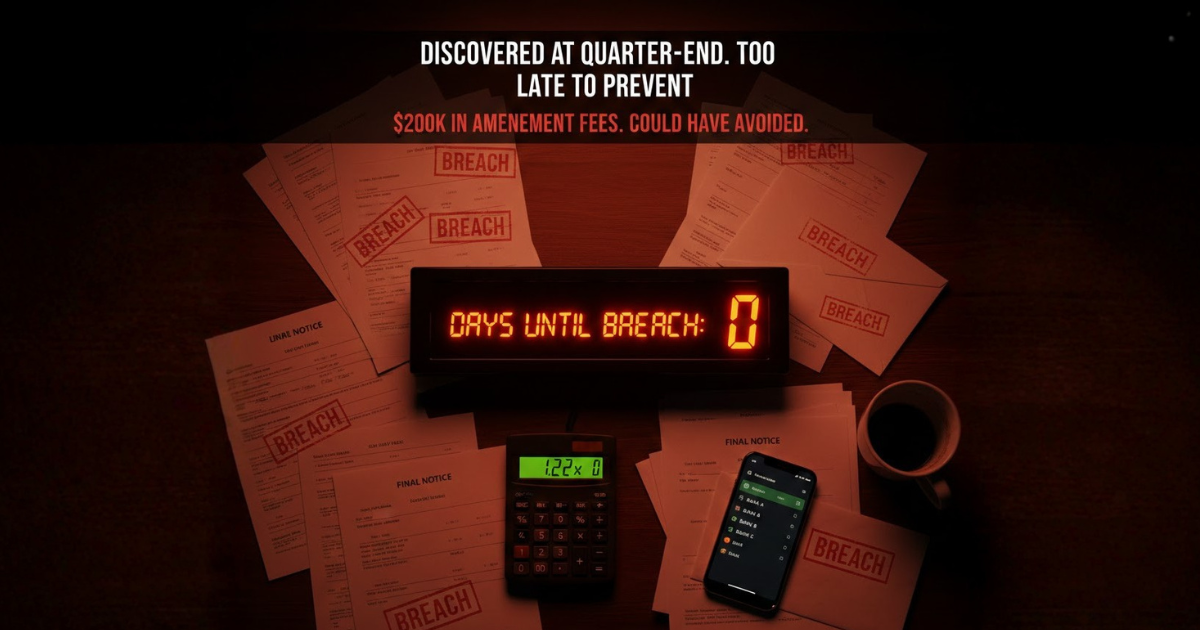

The $200K mistake: How maritime CFOs discover covenant breaches too late

Covenant Breaches Discovered After the Fact It's Thursday morning. Your quarterly covenant compliance certificates are due to lenders by Friday. Your...


3 min read

Alex, Dec 26, 2025


Sanctions risk is now an operational risk for every ship operator. Recent regulatory guidance and high-profile enforcement actions show that regulators expect continuous, auditable checks, not ad-hoc manual reviews. At the same time, deceptive tactics (AIS gaps, ship-to-ship transfers, rapid renamings and layered ownership) make naive list-matching unreliable. The answer isn’t just more data: it’s explainable AI (XAI) that flags risk and explains why, producing defensible, auditor-ready decisions that keep charters moving and banks reassured.


Regulators and industry bodies have issued a growing body of shipping-specific guidance. OFAC and other authorities have published maritime-focused sanctions advisories that urge enhanced due diligence on vessel identity, ownership and ship-to-ship transfers, and that stress record keeping and escalation. These advisories make clear that firms must demonstrate how they assessed a counterparty, not just that they compiled a list.

At the same time, the growth of shadow fleets and deceptive routing, documented in recent industry reports, means operators face genuinely novel risks (falsified AIS data, STS transfers, rapid re-flagging). Manual checks alone can’t scale.

Traditional sanctions screening systems produce a binary hit/no-hit or an opaque risk score. Explainable AI upgrades that output by providing structured reasons, e.g., “Beneficial owner name ≈ OFAC 92% match; AIS dark period of 14 days during suspected STS; recent IMO number change.” Good explanations combine (1) what matched, (2) why it matters (context), and (3) confidence. That three-part answer is what auditors and banks need before they will accept a counterparty as cleared.
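As a minimal sketch of the three-part structure, the Python below models each reason as a small record (what matched, why it matters, confidence) and renders the reasons highest-confidence first. The `Reason` class, the `render` function and the reason codes are hypothetical names chosen for illustration, not part of any particular screening product.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Reason:
    """One explanation item in the three-part form: what, why, confidence."""
    code: str          # short reason code (hypothetical naming, e.g. "OwnershipMatch")
    what: str          # (1) what matched
    why: str           # (2) why it matters in context
    confidence: float  # (3) confidence in [0, 1]


def render(reasons: List[Reason]) -> str:
    """Render reasons as one line each, highest confidence first."""
    ordered = sorted(reasons, key=lambda r: r.confidence, reverse=True)
    return "\n".join(
        f"[{r.code}] {r.what} | {r.why} | confidence {r.confidence:.0%}"
        for r in ordered
    )


# Illustrative values only.
reasons = [
    Reason("AISGap", "AIS dark period of 14 days",
           "overlaps a suspected STS transfer", 0.80),
    Reason("OwnershipMatch", "Beneficial owner name matches an OFAC entry",
           "a listed party may control the vessel", 0.92),
]
print(render(reasons))
```

Keeping the three parts as separate fields, rather than one free-text string, lets the same record feed the review screen, the audit log and later reporting.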

XAI tools commonly used in financial compliance (SHAP, LIME, and related techniques) can show which features drive a model’s score at the transaction, entity, or voyage level, enabling both local (this decision) and global (model behaviour) transparency. These are widely used, well-documented methods for making ML outputs interpretable.
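For a linear model, SHAP values have a closed form, which makes a compact illustration of a local explanation. The sketch below assumes a logistic-regression risk model explained in log-odds space, with features treated as independent; under those assumptions each feature’s contribution is its weight times the distance of its value from the background mean. The weights, feature names and background rows are made up. For non-linear models, a library such as `shap` would typically be used instead of hand-written code.

```python
import math
from typing import Dict, List

# Hypothetical logistic-regression risk model (weights in log-odds units).
WEIGHTS = {"owner_name_similarity": 3.0, "ais_gap_days": 0.15, "imo_changed": 1.5}
BIAS = -4.0


def log_odds(x: Dict[str, float]) -> float:
    """Linear part of the logistic model."""
    return BIAS + sum(WEIGHTS[k] * x[k] for k in WEIGHTS)


def linear_shap(x: Dict[str, float],
                background: List[Dict[str, float]]) -> Dict[str, float]:
    """Exact SHAP values for a linear model with independent features:
    phi_i = w_i * (x_i - mean of feature i over the background data)."""
    n = len(background)
    means = {k: sum(row[k] for row in background) / n for k in WEIGHTS}
    return {k: WEIGHTS[k] * (x[k] - means[k]) for k in WEIGHTS}


# Made-up background population and vessel under review.
background = [
    {"owner_name_similarity": 0.1, "ais_gap_days": 0.0, "imo_changed": 0.0},
    {"owner_name_similarity": 0.3, "ais_gap_days": 2.0, "imo_changed": 0.0},
]
vessel = {"owner_name_similarity": 0.92, "ais_gap_days": 14.0, "imo_changed": 1.0}

phi = linear_shap(vessel, background)
baseline = sum(log_odds(row) for row in background) / len(background)

# Local accuracy: contributions add up to (this score - average score).
assert math.isclose(sum(phi.values()), log_odds(vessel) - baseline)
print(sorted(phi.items(), key=lambda kv: -abs(kv[1])))
```

The assertion checks SHAP’s local accuracy property: the per-feature contributions sum exactly to the gap between this vessel’s log-odds and the average over the background data, which is what makes the attributions additive and easy to present.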

Below is a practical, operator-friendly decision spine to embed inside a VMS/chartering platform:

Data enrichment & multi-source screening

Pull sanctions lists (OFAC, EU, UK, UN), vessel registries, ownership graphs, AIS/telemetry, port call histories and P&I/insurance watchlists. Use fingerprinting to detect IMO, name and flag changes. (Consolidating sources reduces false negatives.)
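One simple form of identity fingerprinting is to compare consecutive registry observations for the same hull and count changes. The sketch below assumes a hypothetical `RegistryRecord` history that an upstream matching step has already grouped by hull, and a one-year look-back window; both are illustrative choices. Because an IMO number is meant to stay with a ship for life, an IMO change in the history is counted separately as a strong anomaly.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List


@dataclass(frozen=True)
class RegistryRecord:
    """One registry observation of a vessel (hypothetical data model)."""
    observed: date
    imo: str
    name: str
    flag: str


def identity_changes(history: List[RegistryRecord],
                     window_days: int = 365) -> Dict[str, int]:
    """Count name, flag and IMO changes between consecutive observations.

    A change is counted when the later observation falls inside the trailing
    window that ends at the most recent observation.
    """
    changes = {"name": 0, "flag": 0, "imo": 0}
    if not history:
        return changes
    ordered = sorted(history, key=lambda r: r.observed)
    cutoff = ordered[-1].observed - timedelta(days=window_days)
    for prev, cur in zip(ordered, ordered[1:]):
        if cur.observed < cutoff:
            continue
        for attr in changes:
            if getattr(prev, attr) != getattr(cur, attr):
                changes[attr] += 1
    return changes


# Made-up history for illustration (not real ships).
history = [
    RegistryRecord(date(2024, 1, 10), "9123456", "SEA STAR", "PA"),
    RegistryRecord(date(2025, 2, 1), "9123456", "OCEAN PEARL", "CM"),
    RegistryRecord(date(2025, 6, 15), "9123456", "BLUE WAVE", "CM"),
    RegistryRecord(date(2025, 9, 30), "9654321", "BLUE WAVE", "GA"),
]
print(identity_changes(history))  # {'name': 2, 'flag': 2, 'imo': 1}
```

The counts can then become model features (for example, renamings in the last year) rather than hard rules, so that a single legitimate sale and re-flag does not block a fixture on its own.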

Automated scoring + XAI rationale

The model scores a vessel or counterparty and returns an explanation: top contributing features, supporting evidence (snapshot of matched list entries, AIS timeline) and a confidence band.
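The payload described in this step can be sketched as a small result object. In the Python below, the confidence band is assumed to come from the spread of scores across an ensemble of models (one of several reasonable ways to express uncertainty), the contributions are assumed to be per-feature attributions such as SHAP values, and only evidence for the top-ranked features is attached. All names and numbers are hypothetical.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List, Tuple


@dataclass
class ScoreResult:
    score: float                           # mean score across ensemble members
    band: Tuple[float, float]              # (lowest, highest) member score
    top_features: List[Tuple[str, float]]  # ranked by absolute contribution
    evidence: Dict[str, str] = field(default_factory=dict)


def build_result(member_scores: List[float], contributions: Dict[str, float],
                 evidence: Dict[str, str], top_k: int = 3) -> ScoreResult:
    """Combine ensemble scores, feature contributions and evidence into one payload."""
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    top = ranked[:top_k]
    top_names = {name for name, _ in top}
    return ScoreResult(
        score=mean(member_scores),
        band=(min(member_scores), max(member_scores)),
        top_features=top,
        # Attach evidence only for the features that actually drove the score.
        evidence={k: v for k, v in evidence.items() if k in top_names},
    )


result = build_result(
    member_scores=[0.81, 0.86, 0.78],
    contributions={"OwnershipMatch": 2.16, "AISGap": 1.95,
                   "IMOChange": 1.5, "PortCallRisk": -0.2},
    evidence={"OwnershipMatch": "Matched OFAC list entry (snapshot)",
              "AISGap": "AIS timeline showing a 14-day gap",
              "PortCallRisk": "Port call history"},
)
print(result.score, result.band, result.top_features)
```

A wide band (ensemble members disagreeing) is itself a useful signal to route a case to a human even when the mean score is moderate.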

Human-in-the-loop review

Present the XAI output to the chartering or compliance user in a single pane: reason codes (e.g., OwnershipMatch, AISGap, STSHistory), confidence, recommended action (Block / Escalate / Clear) and one-click evidence attachments.
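A recommended action can be derived from the score and reason codes with an explicit, reviewable policy. The thresholds and code sets below are illustrative assumptions, including the hypothetical `DirectListMatch` code; a real programme would set them through its governance process and record them alongside the model version. The recommendation only pre-fills the reviewer’s choice; the human decision is still what gets logged.

```python
from typing import Iterable

# Hypothetical policy values, for illustration only.
BLOCK_CODES = {"DirectListMatch"}                       # always recommend Block
ESCALATE_CODES = {"OwnershipMatch", "AISGap", "STSHistory"}


def recommend_action(score: float, reason_codes: Iterable[str],
                     block_at: float = 0.9, escalate_at: float = 0.5) -> str:
    """Map a risk score in [0, 1] plus reason codes to Block / Escalate / Clear."""
    codes = set(reason_codes)
    if codes & BLOCK_CODES or score >= block_at:
        return "Block"
    if codes & ESCALATE_CODES or score >= escalate_at:
        return "Escalate"
    return "Clear"


print(recommend_action(0.62, ["OwnershipMatch", "AISGap"]))  # Escalate
print(recommend_action(0.15, []))                            # Clear
```

Keeping the policy as data (code sets and thresholds) rather than buried logic makes it easy to document and version for auditors.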

Decision logging & audit trail

Record full inputs, the XAI explanation, the final decision, reviewer identity, timestamp and any override justification. This audit artefact is essential for regulators and banks.
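A decision record along these lines can be sketched with the Python standard library. Two details are additions, not requirements stated in the text: overrides (a decision that differs from the recommendation) are rejected unless they carry a written justification, and each record stores a SHA-256 hash of the previous one so that later edits to the log are detectable. Field names are hypothetical; a production system would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict, List, Optional


def _digest(body: Dict[str, Any]) -> str:
    """Stable SHA-256 of a record body (keys sorted for reproducibility)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_decision(log: List[Dict[str, Any]], *, inputs: Dict[str, Any],
                    explanation: Dict[str, Any], recommended: str, decision: str,
                    reviewer: str, justification: Optional[str] = None) -> Dict[str, Any]:
    """Append one decision record; overrides must include a justification."""
    if decision != recommended and not (justification and justification.strip()):
        raise ValueError("override requires a written justification")
    record = {
        "inputs": inputs,
        "explanation": explanation,
        "recommended": recommended,
        "decision": decision,
        "reviewer": reviewer,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "justification": justification,
        "prev_hash": log[-1]["hash"] if log else None,  # chain to previous record
    }
    record["hash"] = _digest(record)
    log.append(record)
    return record


def verify_chain(log: List[Dict[str, Any]]) -> bool:
    """Recompute every hash; any edited or reordered record breaks the chain."""
    prev = None
    for rec in log:
        body = {k: v for k, v in rec.items() if k != "hash"}
        if body["prev_hash"] != prev or _digest(body) != rec["hash"]:
            return False
        prev = rec["hash"]
    return True


log: List[Dict[str, Any]] = []
append_decision(log, inputs={"imo": "9123456"}, explanation={"top": ["OwnershipMatch"]},
                recommended="Escalate", decision="Escalate", reviewer="analyst.a")
append_decision(log, inputs={"imo": "9123456"}, explanation={"top": ["AISGap"]},
                recommended="Block", decision="Clear", reviewer="analyst.b",
                justification="Gap matches a documented dry-dock period.")
print(verify_chain(log))  # True
```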

Feedback loop

Capture reviewer corrections (false positive/negative) to retrain the model and reduce noise over time.
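A minimal sketch of the feedback loop, assuming each review is stored with the model’s features, whether the model flagged the case, and the reviewer’s final determination (a hypothetical `Review` record). The reviewer’s determination becomes the training label. False negatives only show up if some unflagged cases are also reviewed, for example through periodic QA sampling, which this sketch assumes.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Review:
    """One reviewed alert or QA sample (hypothetical data model)."""
    features: Dict[str, float]
    model_flagged: bool        # did the model raise an alert?
    reviewer_confirmed: bool   # did the reviewer confirm genuine sanctions risk?


def to_training_rows(reviews: List[Review]) -> List[Tuple[Dict[str, float], int]]:
    """Label each example with the reviewer's final determination (1 = risk)."""
    return [(r.features, int(r.reviewer_confirmed)) for r in reviews]


def correction_counts(reviews: List[Review]) -> Dict[str, int]:
    """Count the reviewer corrections the next retraining should learn from."""
    false_pos = sum(r.model_flagged and not r.reviewer_confirmed for r in reviews)
    false_neg = sum(r.reviewer_confirmed and not r.model_flagged for r in reviews)
    return {"false_positive": false_pos, "false_negative": false_neg}


# Made-up reviews: a confirmed hit, a cleared false alarm, and a QA-sampled miss.
reviews = [
    Review({"owner_name_similarity": 0.95}, model_flagged=True, reviewer_confirmed=True),
    Review({"owner_name_similarity": 0.70}, model_flagged=True, reviewer_confirmed=False),
    Review({"owner_name_similarity": 0.40}, model_flagged=False, reviewer_confirmed=True),
]
print(correction_counts(reviews))
```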

The review pane should show:

Evidence snapshot: small cards showing the matched sanctions list entry, ownership graph match and AIS map overlay (with timestamps).

Explainability panel: ranked feature contributions (e.g., SHAP values) with plain-language translation (“Owner name similarity drove 60% of score”).

Confidence & provenance: show data source and last refresh time for each evidence piece.

One-click actions: Escalate to legal, Request enhanced due diligence, or Clear & document.

These UI elements convert model outputs into operational actions and make the decision defensible to auditors.
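The plain-language translation in the explainability panel can be produced from the same feature contributions. The sketch below expresses each feature’s share of the total absolute contribution as a percentage, which is one reasonable reading of a phrase like “Owner name similarity drove 60% of score”; it is a share of influence, not a literal fraction of the probability. The label mapping is hypothetical.

```python
from typing import Dict, List

# Hypothetical mapping from model feature names to business-language labels.
LABELS = {
    "owner_name_similarity": "Owner name similarity",
    "ais_gap_days": "AIS dark period",
    "imo_changed": "Recent IMO number change",
}


def explain_in_words(contributions: Dict[str, float], top_k: int = 3) -> List[str]:
    """Describe the top features and their share of total absolute contribution."""
    total = sum(abs(v) for v in contributions.values())
    if total == 0:
        return ["No single factor drove this score."]
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    lines = []
    for name, value in ranked[:top_k]:
        direction = "raised" if value > 0 else "lowered"
        share = abs(value) / total
        lines.append(f"{LABELS.get(name, name)} {direction} the score "
                     f"({share:.0%} of total influence)")
    return lines


for line in explain_in_words({"owner_name_similarity": 3.0,
                              "ais_gap_days": 1.5, "imo_changed": 0.5}):
    print(line)
```

Reporting direction as well as share matters: a feature that lowered the score (for example, a clean port call history) is also evidence a reviewer may want to cite when clearing a case.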

Explainability is only half the journey; model governance completes it. A shipping compliance XAI programme needs:

Documented model specs (purpose, limitations, data lineage).

Versioned training data & performance logs (to show non-discriminatory behaviour and stability).

Periodic back-testing (measure false positive trends and adverse impacts).

Access controls & approval flows (who can override and why).

These measures also align with growing regulatory expectations: the EU AI Act requires transparency, technical documentation and human oversight for high-risk AI systems, and financial regulators expect similar controls over models used in compliance. Whether or not a particular screening tool is formally classed as high-risk, firms using XAI in compliance should prepare technical documentation and explainability artefacts for audits.
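The periodic back-testing item in the governance list can start as simply as tracking the false positive rate per period and flagging when the latest period drifts away from the historical baseline. The period structure and the five-percentage-point tolerance below are illustrative assumptions, and the figures are invented. A fuller back-test would also track missed true positives and keep the results with the versioned model documentation.

```python
from typing import Dict, List, Tuple


def fp_rate(alerts: int, false_positives: int) -> float:
    """False positive rate for one period (0.0 when there were no alerts)."""
    return false_positives / alerts if alerts else 0.0


def backtest_report(periods: List[Tuple[str, int, int]],
                    tolerance: float = 0.05) -> Dict[str, object]:
    """periods: (label, alerts, confirmed false positives), oldest first.

    Flags drift when the latest period's false positive rate exceeds the
    mean rate of earlier periods by more than `tolerance`.
    """
    rates = [(label, fp_rate(alerts, fps)) for label, alerts, fps in periods]
    if len(rates) < 2:
        return {"rates": rates, "drift": False}
    baseline = sum(rate for _, rate in rates[:-1]) / (len(rates) - 1)
    latest = rates[-1][1]
    return {"rates": rates, "baseline": baseline, "latest": latest,
            "drift": latest - baseline > tolerance}


# Made-up quarterly back-test results.
report = backtest_report([("2025-Q1", 200, 120), ("2025-Q2", 180, 99),
                          ("2025-Q3", 210, 147)])
print(report["drift"])  # True: 70% in Q3 vs a 57.5% baseline
```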

Common pitfalls

Over-reliance on a single data source. Counterparty deception often exploits gaps between lists; supplement list screening with ownership graphs and AIS analytics.

Explanations that are technical but not actionable. Translate model contributions into business language (e.g., “Ownership match - high risk”) so charterers can make decisions.

No feedback loop. Without operator input, models don’t learn what is noise for your trading lanes. Capture overrides and retrain.

No provenance or stale data. Make it obvious when a source was last refreshed - stale lists + AIS gaps are a compliance risk.
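Ownership graphs help with the first pitfall because layered structures can hide a listed party several steps above the registered owner. The sketch below walks up a hypothetical shareholder map breadth-first and reports every chain that reaches a listed party, with an effective stake computed by multiplying stakes along the chain (one common convention for indirect ownership). How stakes should be aggregated under a specific regime, for example OFAC’s 50 percent rule, is a legal determination this sketch does not attempt. All entity names are invented.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

# entity -> its direct shareholders as (shareholder, fractional stake).
Shareholders = Dict[str, List[Tuple[str, float]]]


def listed_owner_paths(shareholders: Shareholders, start: str, listed: Set[str],
                       max_depth: int = 6) -> List[Tuple[List[str], float]]:
    """Breadth-first walk up the ownership chain from `start`.

    Returns every path that reaches a listed party, with the effective stake
    taken as the product of stakes along the path. Loops are skipped and the
    walk stops after `max_depth` ownership layers.
    """
    hits: List[Tuple[List[str], float]] = []
    queue = deque([([start], 1.0)])
    while queue:
        path, stake = queue.popleft()
        node = path[-1]
        if node in listed:
            hits.append((path, stake))
        if len(path) > max_depth:
            continue
        for holder, share in shareholders.get(node, []):
            if holder not in path:  # skip circular ownership
                queue.append((path + [holder], stake * share))
    return hits


shareholders = {
    "Blue Wave Shipping Ltd": [("Harbor Holdings SA", 0.6), ("Private Investor A", 0.4)],
    "Harbor Holdings SA": [("Listed Person X", 0.8), ("Trust Co B", 0.2)],
}
for path, stake in listed_owner_paths(shareholders, "Blue Wave Shipping Ltd",
                                      {"Listed Person X"}):
    print(" -> ".join(path), f"({stake:.0%} effective)")
```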

KPIs to track

Time-to-clear for positive matches (how quickly genuine positives are escalated).

False positive rate (and reduction over time).

Override justification completeness (percent of overrides with documented reason).

Data freshness (time since last refresh for each data source).

Model stability (performance drift; back-test loss).
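Several of these KPIs fall straight out of the decision log. The sketch below computes the false positive rate, median hours to escalate genuine positives, override justification completeness and stale data sources. The record fields (`outcome`, `flagged_at`, `escalated_at`, `decision`, `recommended`, `justification`) and the 24-hour freshness limit are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone
from statistics import median
from typing import Dict, List, Optional


def false_positive_rate(alerts: List[Dict]) -> float:
    """Share of alerts that reviewers cleared as not a genuine match."""
    if not alerts:
        return 0.0
    return sum(1 for a in alerts if a["outcome"] == "false_positive") / len(alerts)


def median_hours_to_escalate(alerts: List[Dict]) -> Optional[float]:
    """Median hours from flag to escalation, for genuine positives only."""
    hours = [(a["escalated_at"] - a["flagged_at"]).total_seconds() / 3600
             for a in alerts if a["outcome"] == "true_positive"]
    return median(hours) if hours else None


def override_completeness(decisions: List[Dict]) -> float:
    """Percent (as a fraction) of overrides that carry a written reason."""
    overrides = [d for d in decisions if d["decision"] != d["recommended"]]
    if not overrides:
        return 1.0
    documented = sum(1 for d in overrides if (d.get("justification") or "").strip())
    return documented / len(overrides)


def stale_sources(last_refresh: Dict[str, datetime], max_age: timedelta,
                  now: datetime) -> List[str]:
    """Data sources whose last refresh is older than `max_age`."""
    return sorted(src for src, ts in last_refresh.items() if now - ts > max_age)


# Made-up log entries.
t0 = datetime(2025, 12, 1, 8, 0, tzinfo=timezone.utc)
alerts = [
    {"outcome": "false_positive", "flagged_at": t0, "escalated_at": None},
    {"outcome": "true_positive", "flagged_at": t0, "escalated_at": t0 + timedelta(hours=2)},
    {"outcome": "true_positive", "flagged_at": t0, "escalated_at": t0 + timedelta(hours=6)},
    {"outcome": "false_positive", "flagged_at": t0, "escalated_at": None},
]
decisions = [
    {"decision": "Clear", "recommended": "Escalate", "justification": "Owner verified"},
    {"decision": "Clear", "recommended": "Block", "justification": ""},
    {"decision": "Block", "recommended": "Block"},
]
now = t0 + timedelta(days=1)
refresh = {"OFAC": now - timedelta(hours=2), "AIS": now - timedelta(hours=30),
           "Registry": now - timedelta(days=3)}
print(false_positive_rate(alerts), median_hours_to_escalate(alerts),
      override_completeness(decisions), stale_sources(refresh, timedelta(hours=24), now))
```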

When a vessel is flagged:

Open the XAI panel. Read the top 3 reasons.

If “OwnershipMatch” is top reason, open the ownership graph and inspect beneficial owners.

If “AISGap” or “STSHistory” appears, view the AIS timeline and satellite imagery overlay.

Decide: Clear (attach rationale), Escalate (legal), or Block (freeze payments/fixtures).

Always save the explanation snapshot: this is your audit file.
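For the AIS review step, dark periods can be extracted from the position timeline before the reviewer opens the map. The sketch below assumes a list of report timestamps for one vessel and treats any silence longer than 24 hours as a gap; that threshold is an illustrative assumption. A gap is a prompt for review, not proof of deliberate switch-off, since coverage holes and receiver outages also produce silences.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def ais_gaps(timestamps: List[datetime],
             min_gap: timedelta = timedelta(hours=24)
             ) -> List[Tuple[datetime, datetime, timedelta]]:
    """Return (last_seen, next_seen, duration) for each silence longer than min_gap."""
    ordered = sorted(timestamps)
    return [(a, b, b - a) for a, b in zip(ordered, ordered[1:]) if b - a > min_gap]


# Made-up track: hourly reports for one day, then 14 days of silence.
start = datetime(2025, 11, 1, 0, 0)
track = [start + timedelta(hours=h) for h in range(24)]
track.append(track[-1] + timedelta(days=14))

for last_seen, next_seen, duration in ais_gaps(track):
    print(f"Dark from {last_seen:%Y-%m-%d %H:%M} to {next_seen:%Y-%m-%d %H:%M} "
          f"({duration.days} days)")
```

Each gap’s start and end times can then be used to pull satellite imagery and nearby vessel tracks for the same window, which is where suspected STS transfers are usually confirmed or ruled out.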

Sanctions screening is no longer a checkbox: it’s a competitive operational capability. Explainable AI lets operators achieve three critical outcomes simultaneously: speed (fewer false alarms), safety (higher detection of real risks), and defensibility (auditable explanations for every decision). For chartering desks and compliance teams, XAI is not a luxury; it’s the tool that turns an opaque risk process into an auditable, trustworthy business control.

Covenant Breaches Discovered After the Fact It's Thursday morning. Your quarterly covenant compliance certificates are due to lenders by Friday. Your...

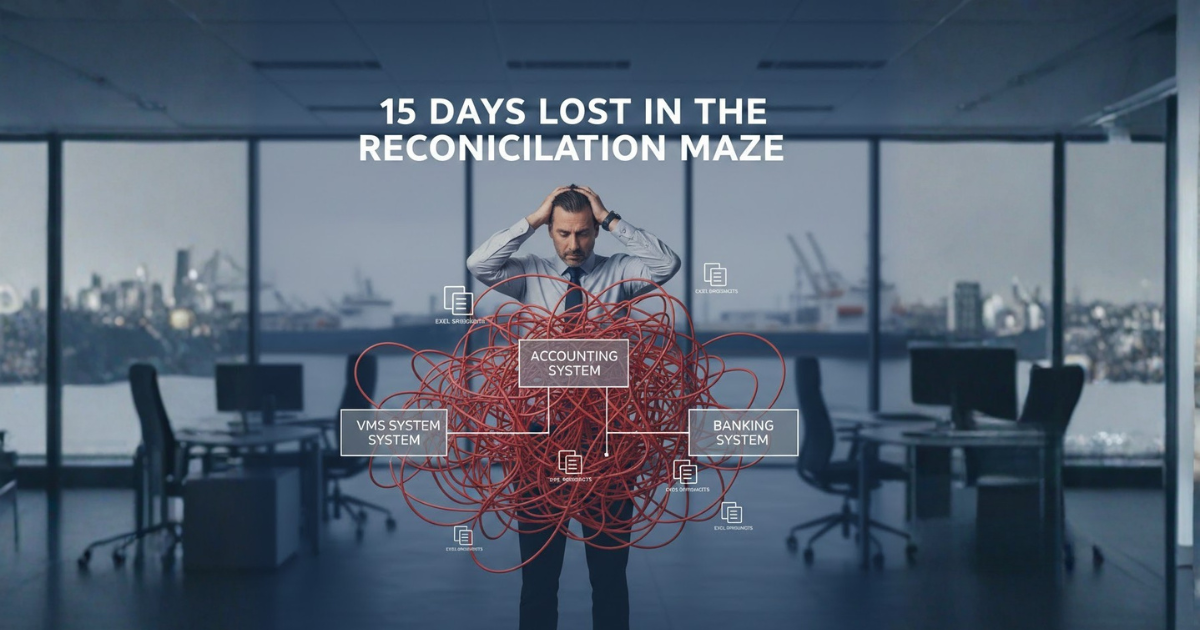

Maritime Month-End Close Takes Forever If you're a CFO at a shipping company managing dry bulk or tanker operations, you know this pain intimately:...

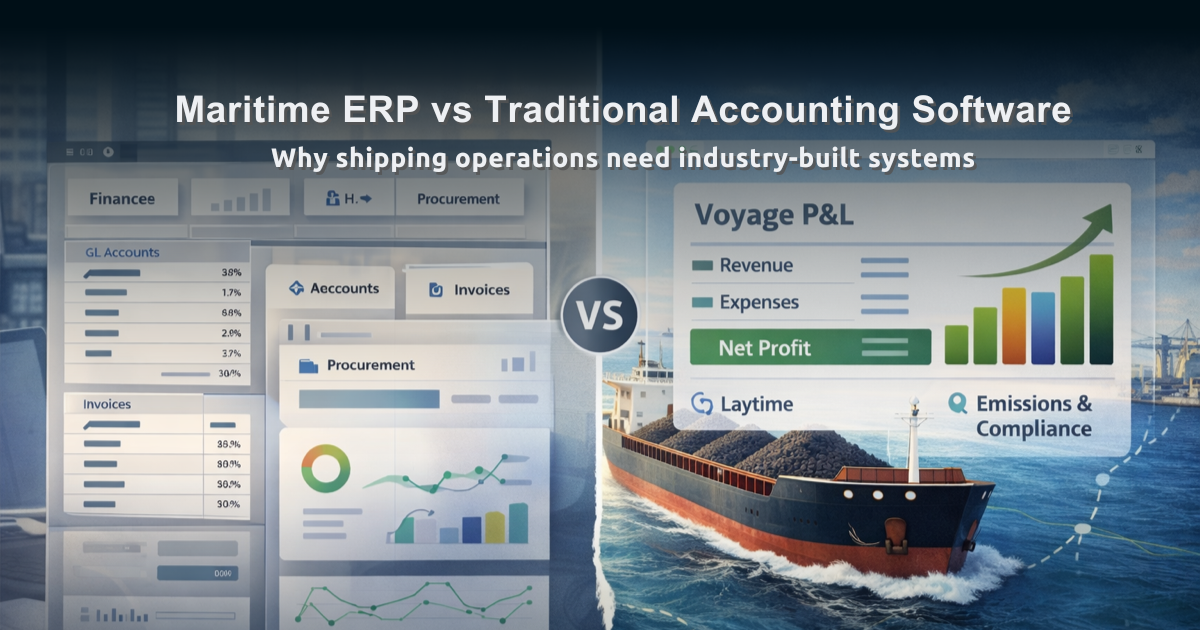

For CFOs and operations managers at dry bulk shipping companies, selecting the right software infrastructure represents one of the most consequential...